Do you happen to have Vector-relevant benchmarks in Scala.js?

No, I don’t think so. Most of our benchmarks are translations of language-agnostic ones, so they tend to use mutable collections. The only Scala-idiomatic benchmark we have is our own linker and optimizer, but we don’t use Vectors, rather Lists most of the time.

I’ve got some Scala.JS Vector benchmarks @ https://japgolly.github.io/scalajs-benchmark/

I haven’t upgraded them to the new Scala versions though. I’ll do that and get back to you soon…

Ah sorry, I can’t upgrade the benchmarks yet because:

[error] (benchmark / update) sbt.librarymanagement.ResolveException: Error downloading org.scala-js:scalajs-compiler_2.13.2-bin-b4428c8:0.6.32

[error] Not found

[error] Not found

[error] not found: /home/golly/.ivy2/local/org.scala-js/scalajs-compiler_2.13.2-bin-b4428c8/0.6.32/ivys/ivy.xml

[error] not found: https://repo1.maven.org/maven2/org/scala-js/scalajs-compiler_2.13.2-bin-b4428c8/0.6.32/scalajs-compiler_2.13.2-bin-b4428c8-0.6.32.pom

[error] not found: https://scala-ci.typesafe.com/artifactory/scala-integration/org/scala-js/scalajs-compiler_2.13.2-bin-b4428c8/0.6.32/scalajs-compiler_2.13.2-bin-b4428c8-0.6.32.pom

@sjrd is there a way to force using a binary incompatible version of Scala.js, i.e., use scala-js built with 2.13.1 (YOLO)? That trick very often works with compiler plugins, as binary breaking changes in the compiler are rare.

Yes, there is a way, but in this case it won’t help, because you’re still going to use the published version of scalajs-library.jar, which was built using the sources of the Scala library v2.13.1. So you’re not going to see the changes to Vector. The only way to see the changes to Vector is to locally publish a snapshot of Scala.js built with the specific Scala nightly version; at which point you also get the compiler plugin for the right version for free.

Thanks @sjrd for the pointer. I always forget how easy it is to build Scala.JS locally (in other words: good job!). I’ve built and published the benchmarks for Scala 2.13.2-bin-b4428c8.

…and I’ve got some mixed news: there are some cases where the new Vector implementation is a significant performance regression in JS-land. Prepending/appending elements is nearly 2x slower (i.e. 3x the original time), Vector.builder is a bit faster, .flatMap is significantly faster (40% duration reduction).

To reproduce my results,

- open up two tabs: 2.13.1 & 2.13.2-bin-b4428c8

- double-click one of the Vector benchmarks (to deselect everything else) and then select the other two Vector benchmarks

- hit Start

- switch tabs, repeat, compare results

Feel free to PR me any new benchmarks you’d like to see and I can recompile and republish. Each benchmark page has a link to the source and you can see they’re pretty concise and easy to write.

Thank you, @japgolly!

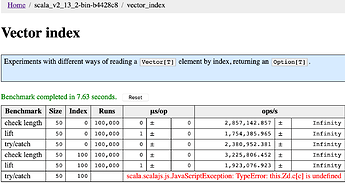

Also noteworthy: in the “Vector index” benchmark, on the “try/catch” benchmark, I get “scala.scalajs.js.JavaScriptException: TypeError: this.Zd.c[c] is undefined”.

For the benchmark numbers, I generally see the same as you mention, but there are huge differences between browsers. For example, in the “collection building” benchmark:

- Chrome seems to be really good with the old Vector, and gets up to 3x slowdown for the new one

- In Safari, the new Vector is only slightly slower than the old one, beating Chrome by far on the new (but slightly slower than Chrome on the old)

- Firefox is the slowest of all browsers, and gets up to 40% slower for the new Vector

On balance, does this give us pause about going forward with the new Vector?

I think it probably shouldn’t, because:

- 90%+ of Scala usage is on the JVM

- some of the slowdowns on Scala.js are dismayingly large, but some of the speedups on the JVM are large, too

- in general, on any platform, users who are manipulating large amounts of data and really care about performance may need to make platform-specific and Scala-version-specific decisions about what data structures to use; this is just one example of that.

Regardless, the benchmark numbers will remain valuable as guidance for Scala.js users who care about performance — thanks, David, for that.

The final call on this isn’t mine to make, but we do need to make a call before much longer, as the last big 2.13.2 PR recently landed so we might be ready for release as early as next week.

I suppose it’s not an option to have both implementations in stdlib, and maybe even somehow default JVM to one and JS to the other (but both implementations exist everywhere and can be accessed directly if needed)?

I was hoping the results might elicit some insight from @sjrd about what the story is, and/or the PR author would jump in and confirm the JVM-side is (or isn’t) fine.

I’d suggest that before signing off on 2.13.2 we understand whether the slowdown we’re seeing only occurs in JS-land, or whether it occurs on the JVM too.

If it’s a JS-only slowdown then it would make sense to release it and then probably the Scala.JS team will wave their magic wand over time to provide further optimisations. If it’s the case that I’m benchmarking cases that haven’t been considered and it’s a universal slowdown affecting the JVM too, then it might be an idea to work out what the fix will require before we lock ourselves in to binary backward-compatibility.

My feeling is it shouldn’t block the rollout. Not only is JVM Scala much bigger, I would also argue:

- The use cases are also a lot more performance sensitive. Frontend code tends to be more limited by complexity than perf

- And a lot of browser work that is performance sensitive is not bound on Scala.js execution speed: script download speed, script parsing speed, JS library speed (e.g. React), network round trips (front code tends to be ajaxy), DOM perf, CSS rendering perf (my personal most recent Scala.js perf battle)

- Browser perf is a mercurial thing. While the JVM behaves the same yesterday as today, browser optimizations change rapidly and perf for the same code can swing around massively over time, nevermind different browsers. IME the standard perf advice is to try to optimize code “semantically”, but not to micro-optimize with the browser optimizer in mind as such wins or losses tend to be fleeting.

This is pure speculation, but a possible reason for the performance regression is the new design with 7 subclasses. Vector is final in 2.13.1, so operations on a vector (e.g., appended) have a single implementation. In the new design, each subclass overrides appended.
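To make the speculation above concrete, here is a minimal sketch of the shape being described. It is a hypothetical three-class miniature (`Vec0`, `Vec1`, `VecN` are invented names), not the real Vector code: each subclass overrides `appended`, so a single call site ends up seeing several receiver classes.

```scala
object DispatchSketch {
  // Hypothetical miniature hierarchy; the real Vector is far more involved.
  sealed abstract class Vec {
    def appended(x: Int): Vec
    def toList: List[Int]
  }

  // Empty case.
  object Vec0 extends Vec {
    def appended(x: Int): Vec = new Vec1(x)
    def toList: List[Int] = Nil
  }

  // Single-element case.
  final class Vec1(a: Int) extends Vec {
    def appended(x: Int): Vec = new VecN(Array(a, x))
    def toList: List[Int] = List(a)
  }

  // General case, backed by a copied array (naive on purpose).
  final class VecN(elems: Array[Int]) extends Vec {
    def appended(x: Int): Vec = new VecN(elems :+ x)
    def toList: List[Int] = elems.toList
  }

  // The v.appended(i) call below dispatches on Vec0, then Vec1, then VecN.
  // With a single final class there would be exactly one target instead.
  def build(n: Int): Vec = {
    var v: Vec = Vec0
    var i = 0
    while (i < n) { v = v.appended(i); i += 1 }
    v
  }
}
```

`DispatchSketch.build(4).toList` gives `List(0, 1, 2, 3)`. Whether this kind of call site is actually what slows down a given JS engine is not something the sketch demonstrates; it only shows the structural difference.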

flatMap is not overridden in Vector; the implementation uses the new VectorBuilder, which was heavily optimized and brings significant performance benefits compared to the old vector on the JVM. This seems to translate to JS too.

More concerning than the performance regression is the TypeError: this.Zd.c[c] is undefined that shows up on one of the benchmarks – @sjrd, what are your thoughts on this?

Doesn’t Scala.js already replace some small parts of the std library with custom implementations? But maintaining a custom implementation of something as complex as Vector might not be feasible.

I don’t know enough about the implementations (old and new) of Vector to make any reasonable guess.

In general, significant performance profile differences between JVM and JS can be observed for two main reasons:

- Preventing Scala.js from inlining and stack allocating. The JVM does this at run-time based on profiles. Scala.js can only do that at link time using static information, but when it can, it’s better at it. Sometimes a method wouldn’t be inlined by the JVM, but Scala.js would inline it, possibly avoiding a class allocation. Changing the shape of the code might make no difference on the JVM (which still wouldn’t inline) while also preventing Scala.js from inlining. This can result in performance drops in Scala.js that are not observable on the JVM.

- Type tests on interfaces. They are slow on JS. Every time you do a type test with an interface type to “optimize” some code paths, it makes it worse on JS.
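As an illustration of the second point, here is a small sketch contrasting an interface type test with a plain virtual call. All names (`Sized`, `Source`, `sizeViaTypeTest`) are hypothetical and not taken from the standard library, and no measurement is implied.

```scala
object TypeTestSketch {
  // Hypothetical interface used only for a "fast path" check.
  trait Sized { def knownSize: Int }
  final class Fixed(n: Int) extends Sized { def knownSize: Int = n }

  // Fast path guarded by a type test against an interface (trait).
  // This is the pattern described above as slow on JS.
  def sizeViaTypeTest(x: Any): Int = x match {
    case s: Sized => s.knownSize
    case _        => -1
  }

  // Alternative shape: put the default on a common base class and
  // override it, so the caller makes a virtual call and needs no type test.
  abstract class Source { def sizeHint: Int = -1 }
  final class FixedSource(n: Int) extends Source {
    override def sizeHint: Int = n
  }
  final class UnknownSource extends Source
}
```

Both shapes return the same answers; the difference is only in how the decision is made at run-time.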

More concerning than the performance regression is the TypeError: this.Zd.c[c] is undefined that shows up on one of the benchmarks – @sjrd, what are your thoughts on this?

Is this only in fullOpt mode? What’s the corresponding error message in fastOpt mode? The message would be more intelligible. This looks like an ArrayIndexOutOfBoundsException to me, but the fastOpt code would show that for sure (because they’re checked in fastOpt mode).

I suppose it’s not an option to have both implementations in stdlib, and maybe even somehow default JVM to one and JS to the other (but both implementations exist everywhere and can be accessed directly if needed)?

No, it’s not an option, because I have neither the bandwidth nor the knowledge to maintain an entirely different version of Vector.

Huh! It appears the new Vector intentionally catches ArrayIndexOutOfBoundsExceptions. That is a big big no-no in Scala.js! It won’t work. AIOOBEs are undefined behavior. This is absolutely, utterly terrible.

Please don’t ship that!

Also, this can explain the performance regressions in JS, because try..catches are expensive in JS, even when they don’t actually catch anything.

OK, let me tone that down a bit. It only catches ArrayIndexOutOfBoundsExceptions in order to throw IndexOutOfBoundsExceptions and NoSuchElementExceptions instead. So those cases in Scala.js would throw UBEs in fastOpt (instead of IOOBEs), but that can be considered acceptable. It does mean that the contract of Vector is worse in Scala.js: calling .head on an empty vector is now UB, instead of reliably throwing NoSuchElementException. The error message of the UB is also worse because it mentions internal implementation details of an array index exception, instead of a NoSuchElementException. NoSuchElementExceptions were never considered UB before, so this is a breach of the portability contract nevertheless.

It could still explain the performance regressions.
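A minimal sketch of the two styles, using a hypothetical `Box` wrapper around an array rather than the actual Vector code: one relies on catching the array exception and translating it, the other checks explicitly before touching the array.

```scala
object HeadSketch {
  // Hypothetical wrapper, not the real Vector implementation.
  final class Box(data: Array[Int]) {

    // Style discussed above: let the array access fail, then translate.
    // On the JVM this throws NoSuchElementException for an empty Box.
    // On Scala.js an out-of-bounds array access is undefined behavior,
    // so this catch cannot be relied upon there.
    def headCatching: Int =
      try data(0)
      catch {
        case _: ArrayIndexOutOfBoundsException =>
          throw new NoSuchElementException("head of empty Box")
      }

    // Explicit check: never performs an out-of-bounds access, so it does
    // not depend on the array exception being thrown, and needs no try/catch.
    def headChecked: Int =
      if (data.length == 0) throw new NoSuchElementException("head of empty Box")
      else data(0)
  }
}
```

On the JVM both methods behave the same; the explicit check is the variant that keeps `NoSuchElementException` out of undefined-behavior territory on Scala.js.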

Another possibility is that megamorphic dispatch of the new Vector is problematic on some JITs but less so on others. The old vector implementation specifically avoided generating subclasses for simple cases in order not to run into that problem. Maybe the JVM has gotten better at megamorphic dispatch (Graal certainly falls into that camp), but JS JITs haven’t? I am only guessing here.

I submitted the issue https://github.com/scala/bug/issues/11920 as well as two PRs https://github.com/scala/scala/pull/8827 and https://github.com/scala/scala/pull/8828.