Original post:

This question is formulated in Scala 3/Dotty but should generalise to any language NOT in the MetaML family.

The Scala 3 macro tutorial:

https://docs.scala-lang.org/scala3/reference/metaprogramming/macros.html

It starts with the Phase Consistency Principle (PCP), which explicitly states that free variables defined in one compilation stage CANNOT be used by the next stage, because the objects they are bound to cannot be persisted to a different compiler process:

> … Hence, the result of the program will need to persist the program state itself as one of its parts. We don’t want to do this, hence this situation should be made illegal

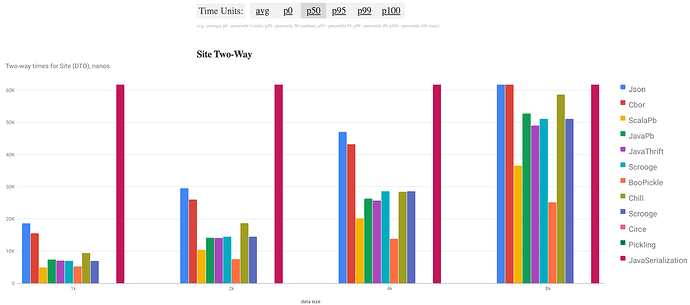

This should be considered a solved problem, given that many distributed computing frameworks demand a similar capability to persist objects across multiple computers. The most common kind of solution (as observed in Apache Spark) uses standard serialisation/pickling to create snapshots of the bound objects (Java standard serialization, Kryo, Twitter Chill), which can be saved on disk or in off-heap memory, or sent over the network.
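To make the comparison concrete, here is a minimal sketch of the kind of snapshotting meant above, using only Java standard serialization from Scala 3. The names `Config` and `Snapshot` are hypothetical and exist only for this illustration; it is not how Spark or the Scala compiler actually do it.

```scala
import java.io.{ByteArrayInputStream, ByteArrayOutputStream, ObjectInputStream, ObjectOutputStream}

// Hypothetical value that a closure might capture; case classes are
// java.io.Serializable by default.
final case class Config(name: String, retries: Int)

// Hypothetical helper: snapshot an object to bytes and restore it again.
object Snapshot:
  def toBytes(value: java.io.Serializable): Array[Byte] =
    val buffer = new ByteArrayOutputStream()
    val out = new ObjectOutputStream(buffer)
    try out.writeObject(value)
    finally out.close()
    buffer.toByteArray

  def fromBytes[A](bytes: Array[Byte]): A =
    val in = new ObjectInputStream(new ByteArrayInputStream(bytes))
    // The cast is unchecked: the caller must know which type was written.
    try in.readObject().asInstanceOf[A]
    finally in.close()

@main def roundTrip(): Unit =
  val original = Config("demo", 3)
  val restored = Snapshot.fromBytes[Config](Snapshot.toBytes(original))
  assert(restored == original)
```

The byte array could just as well be written to disk or sent over the network; restoring it only requires that the same class is on the classpath of the receiving process.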

The tutorial itself also hints at this possibility twice:

> One difference is that MetaML does not have an equivalent of the PCP - quoted code in MetaML can access variables in its immediately enclosing environment, with some restrictions and caveats since such accesses involve serialization. However, this does not constitute a fundamental gain in expressiveness.

In the end,

> ToExpr resembles very much a serialization framework

Instead, both Scala 2 and Scala 3 (and their respective ecosystems) largely ignore these out-of-the-box solutions and only provide default implementations for primitive types (Liftable in Scala 2, ToExpr in Scala 3). In addition, existing libraries that use macros rely heavily on manually defined quasiquotes/quotes for this trivial task, making the source much longer and harder to maintain while not making anything faster (as JVM object serialisation is a highly optimised language component).
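For readers who have not written one, this is roughly what the manual approach looks like in Scala 3. It is a sketch under assumptions: `Point`, `origin` and `originImpl` are hypothetical names, and real libraries usually have to do this for far larger types.

```scala
import scala.quoted.*

// Hypothetical data type that a macro wants to lift into generated code.
final case class Point(x: Int, y: Int)

// Hand-written lifting: every field is lifted separately and the
// constructor call is rebuilt inside a quote.
given ToExpr[Point] with
  def apply(p: Point)(using Quotes): Expr[Point] =
    '{ Point(${ Expr(p.x) }, ${ Expr(p.y) }) }

// Hypothetical macro that computes a value at compile time and lifts it
// through the instance above.
def originImpl(using Quotes): Expr[Point] =
  Expr(Point(0, 0))

inline def origin: Point = ${ originImpl }
```

The macro has to be called from a different source file than the one that defines it. Each additional field or nested type needs its own lifting code, which is the boilerplate a serialisation-based default would avoid.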

What’s the cause of this status quo? How do we improve it?